
Event-driven navigation pattern in iOS

10 minutes read

Who this article is for

This article is for iOS developers exploring app navigation patterns. If you’ve been wondering whether SwiftUI is suitable for navigation or if you should stick with UIKit, this article will provide valuable insights and a solution that worked for me.

It is also aimed at developers who want to see an example of SwiftUI-only navigation for iOS. The existing solution can be adapted to a non-Event-Driven Architecture (EDA) by replacing events with method calls to a router object, such as router.navigate(..). The key difference is that instructing the router where to navigate is imperative, while sending an event is reactive.
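To make that difference concrete, here is a minimal sketch of both styles side by side. `Router`, `Screen`, `NavigationListener`, and this `PlaylistSelected` struct are hypothetical names used only for illustration; they are not taken from the app.

```swift
// Hypothetical destinations, for illustration only.
enum Screen: Equatable {
    case playlists
    case generatedPlaylist
}

// Imperative style: the caller decides where to go.
final class Router {
    private(set) var current: Screen = .playlists

    func navigate(to screen: Screen) {
        current = screen
    }
}

// Reactive style: the caller only reports what happened;
// the navigation layer decides what that means.
struct PlaylistSelected {
    let id: String
}

final class NavigationListener {
    private(set) var current: Screen = .playlists

    func handle(_ event: PlaylistSelected) {
        current = .generatedPlaylist
    }
}

let router = Router()
router.navigate(to: .generatedPlaylist)      // the view knows the destination

let listener = NavigationListener()
listener.handle(PlaylistSelected(id: "42"))  // the view knows only the fact
```

In the first case the view is coupled to a destination; in the second it is coupled only to the event it emits.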

Introduction

Based on my current work, I am developing app navigation using an Event-Driven Architecture (EDA).

In EDA theory, events are categorized into the following types:

- Commands: Events that trigger an action.

- Events: Events that notify about a change.

- Documents: Events that store data.

- Queries: Events that request data.

For the iOS app, I have defined the following events that can influence navigation. Note that queries and documents are not utilized in the navigation layer of the app.

- User Commands: Actions initiated by the user, such as logging in, logging out, or playing a song.

- System Events: Events triggered by the system, such as the system finishing its launch or receiving a deep link.

- Application Events: Events triggered by application services, such as shuffling songs from a playlist, authorizing a service, or deauthorizing a service. Application events can be responses to user commands or system events.
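One possible way to express these categories in Swift is to use caseless enums as namespaces, which matches spellings such as `Command.PlaylistSelected` and `DomainEvent.LaunchFinished` used later in this article. This is a sketch under assumptions: the `Event` marker protocol, the stored properties, and the `describe` helper are hypothetical.

```swift
// Marker protocol for everything that travels on the bus (assumed name).
protocol Event {}

// Caseless enums act as namespaces, giving call sites like
// `Command.PlaylistSelected("id")`.
enum Command {
    struct SpotifyAuthorizationRequested: Event {}

    struct PlaylistSelected: Event {
        let playlistID: String          // property name is an assumption
        init(_ playlistID: String) { self.playlistID = playlistID }
    }
}

enum DomainEvent {
    struct LaunchFinished: Event {}

    struct SpotifyAuthorizationUpdated: Event {
        let isAuthorized: Bool          // payload is an assumption
    }
}

// Consumers can branch on the concrete type of an incoming event.
func describe(_ event: any Event) -> String {
    switch event {
    case let command as Command.PlaylistSelected:
        return "user selected playlist \(command.playlistID)"
    case is DomainEvent.LaunchFinished:
        return "system finished launching"
    default:
        return "unhandled event"
    }
}
```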

Code

Before delving into the details, here is the final code for a simple SwiftUI coordinator—a View that manages navigation within the app.

struct MainCoordinator: View {
    let compositor: CompositionRoot
    @ObservedObject var viewModel: NavigationViewModel

    var body: some View {
        ZStack {
            switch viewModel.root {
            case .launch:
                LaunchView()
            case .serviceSelection:
                ServiceSelectionView()
            case .playlistSelection:
                NavigationStack {
                    ChoosePlaylistView()
                        .environmentObject(compositor.choosePlaylistViewModel)
                        .navigationDestination(
                            isPresented: $viewModel.isFocusPlaylistPresented,
                            destination: {
                                GeneratedPlaylistView()
                                    .environmentObject(compositor.generatedPlaylisViewModel)
                            }
                        )
                }
                .sheet(isPresented: $viewModel.isSpotifyDeviceActivationPresented) {
                    DeviceActivationView()
                        .environmentObject(compositor.deviceActivationViewModel)
                        .presentationDetents([.medium])
                        .presentationDragIndicator(.visible)
                }
            }
            ErrorNotificationView()
                .environmentObject(compositor.errorViewModel)
        }
    }
}

The NavigationViewModel listens for the following commands and events:

DomainEvent.SpotifyAuthorizationUpdated

DomainEvent.LaunchFinished

DomainEvent.SpotifyAuthURLGenerated

Command.PlaylistSelected

Command.OpenAnotherAppRequested

Command.DidRequestOpenPlaylistOnSpotify

Command.PlayOnSpotifyRequested

DomainEvent.SpotifyPremiumOnlyActionAttempted
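How the NavigationViewModel subscribes is not shown in this article, so the following is only a sketch of one way it could work. The synchronous `EventBus` with its `on`/`send` methods is hypothetical (the real bus may be built on Combine or async streams), the view model is a plain class so the sketch runs without SwiftUI, and the transition from `LaunchFinished` to `.serviceSelection` is an assumption.

```swift
// A deliberately small, synchronous event bus (hypothetical).
final class EventBus {
    private var handlers: [(Any) -> Void] = []

    // Registers a handler that fires only for events of type `E`.
    func on<E>(_ type: E.Type, _ handler: @escaping (E) -> Void) {
        handlers.append { event in
            if let typed = event as? E { handler(typed) }
        }
    }

    // Delivers the event to every registered handler, in order.
    func send(_ event: Any) {
        for handler in handlers { handler(event) }
    }
}

enum RootView { case launch, serviceSelection, playlistSelection }

enum DomainEvent {
    struct LaunchFinished {}
}

enum Command {
    struct PlaylistSelected { let id: String }
}

// In the app this would be the ObservableObject with @Published properties.
final class NavigationViewModel {
    private(set) var root: RootView = .launch
    private(set) var isFocusPlaylistPresented = false

    init(eventBus: EventBus) {
        // Assumed transition: leave the launch screen once launch finishes.
        eventBus.on(DomainEvent.LaunchFinished.self) { [weak self] _ in
            self?.root = .serviceSelection
        }
        // A selected playlist pushes the generated playlist screen.
        eventBus.on(Command.PlaylistSelected.self) { [weak self] _ in
            self?.isFocusPlaylistPresented = true
        }
    }
}

let eventBus = EventBus()
let navigation = NavigationViewModel(eventBus: eventBus)
eventBus.send(DomainEvent.LaunchFinished())
eventBus.send(Command.PlaylistSelected(id: "42"))
```

Each subscription maps one event type to one state change, which keeps the full list of navigation triggers visible in a single initializer.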

Navigation layer

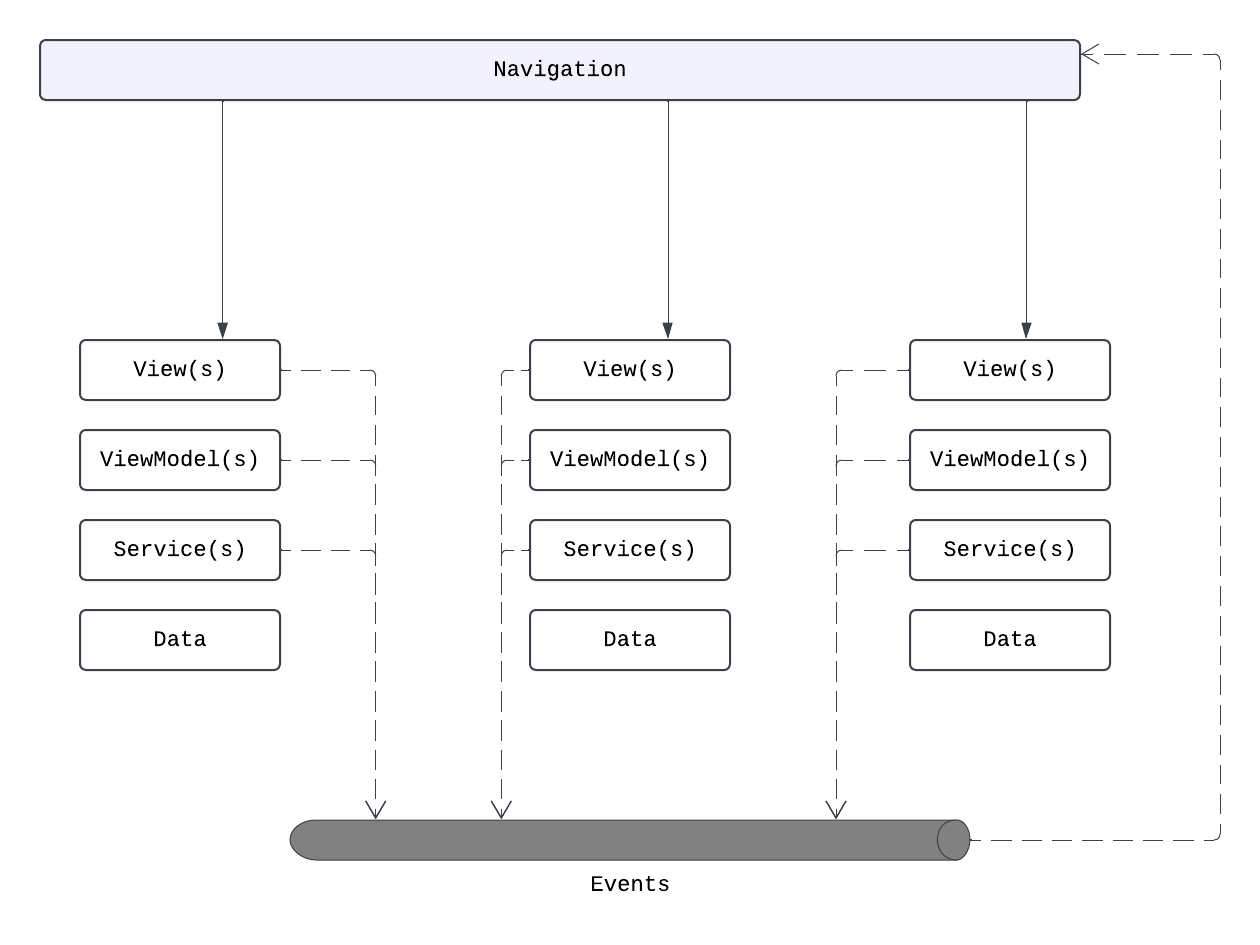

In an N-tier architecture, the navigation layer is the topmost layer of the application. It is responsible for switching between views and serves as the entry point of the app, being the first layer initialized when the app starts.

The tiers are structured as follows:

- Navigation Layer: The top layer of the app, responsible for switching between views.

- Presentation Layer: Contains the views and view models.

- Domain Layer: Contains the service classes and domain models.

- Data Layer: Contains the repositories.

Since the navigation layer is responsible for creating views, it has knowledge of all the layers below it. It creates the views, listens to domain events and commands, and decides what to present next to the user.

By having the NavigationViewModel listen to application events, I can handle events such as SpotifyAuthorizationUpdated and navigate back to a service selection screen where the user will need to reauthorize the service. Additionally, an error message will be displayed. This navigation logic resides in the navigation layer, not in the presentation layer (View or ViewModel).

The navigation layer is a cross-cutting concern that unifies different domains of the app. It is the glue that holds the app together.

Modeling the navigation state

The NavigationViewModel can be modeled in whatever way best fits each application. If the app only displays a stack of views, the view model can hold an array of data describing that stack. If the app has more complicated navigation paths, the view model will be correspondingly more complex.

For simplicity, I used an enum to define the possible root views and a list of flags to model which screen is displayed at the moment.

class NavigationViewModel: ObservableObject {
    @Published @MainActor var root: RootView = .launch
    @Published @MainActor var isFocusPlaylistPresented: Bool = false
    @Published @MainActor var isSpotifyDeviceActivationPresented: Bool = false

    // Event subscriptions omitted.
}

This approach leaves it to the Coordinator to decide how each flag is displayed. Each flag can be passed as a two-way Binding, for example to present a sheet, so closing the sheet from the SwiftUI side also updates the NavigationViewModel. The cost is that I have to maintain the flags myself and turn them on and off correctly when only one screen should be displayed at a time.

Important

NavigationViewModel is the single source of truth for the navigation state of the app. It is testable, it is simple, and it is easy to understand.
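As an illustration of the testability claim, the sketch below mirrors the published properties in a value type and checks two transitions with plain assertions. `NavigationState` and its two mutating methods are hypothetical; they only show the kind of flag bookkeeping a test could pin down.

```swift
enum RootView { case launch, serviceSelection, playlistSelection }

// Value-type mirror of the view model's published properties, so the
// transitions can be checked without SwiftUI (an assumption of this sketch).
struct NavigationState: Equatable {
    var root: RootView = .launch
    var isFocusPlaylistPresented = false
    var isSpotifyDeviceActivationPresented = false

    // Presents one screen at a time by clearing the other flag.
    mutating func presentDeviceActivation() {
        isFocusPlaylistPresented = false
        isSpotifyDeviceActivationPresented = true
    }

    // Returning to service selection dismisses everything above the root.
    mutating func authorizationLost() {
        root = .serviceSelection
        isFocusPlaylistPresented = false
        isSpotifyDeviceActivationPresented = false
    }
}

var state = NavigationState(root: .playlistSelection, isFocusPlaylistPresented: true)
state.presentDeviceActivation()

// The whole navigation state can be compared in one assertion.
assert(state == NavigationState(
    root: .playlistSelection,
    isFocusPlaylistPresented: false,
    isSpotifyDeviceActivationPresented: true
))
```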

Sending parameters between screens

The best code is the code that doesn’t exist. With this principle in mind, I prefer to avoid passing parameters between screens whenever possible.

case .playlistSelection:
    NavigationStack {
        ChoosePlaylistView()
            .environmentObject(compositor.choosePlaylistViewModel)
            .navigationDestination(
                isPresented: $viewModel.isFocusPlaylistPresented,
                destination: {
                    GeneratedPlaylistView()
                        .environmentObject(compositor.generatedPlaylisViewModel)
                }
            )
    }

Note that ChoosePlaylistView and GeneratedPlaylistView do not pass any data to each other.

I challenged myself to design communication between screens that doesn’t require sending any data from one View to another.

This approach is unconventional for many iOS developers, as it deviates from the typical practice of passing parameters between views. However, I believe that passing arguments couples the views and makes it harder to experiment with different navigation patterns or switch to different navigation flows.

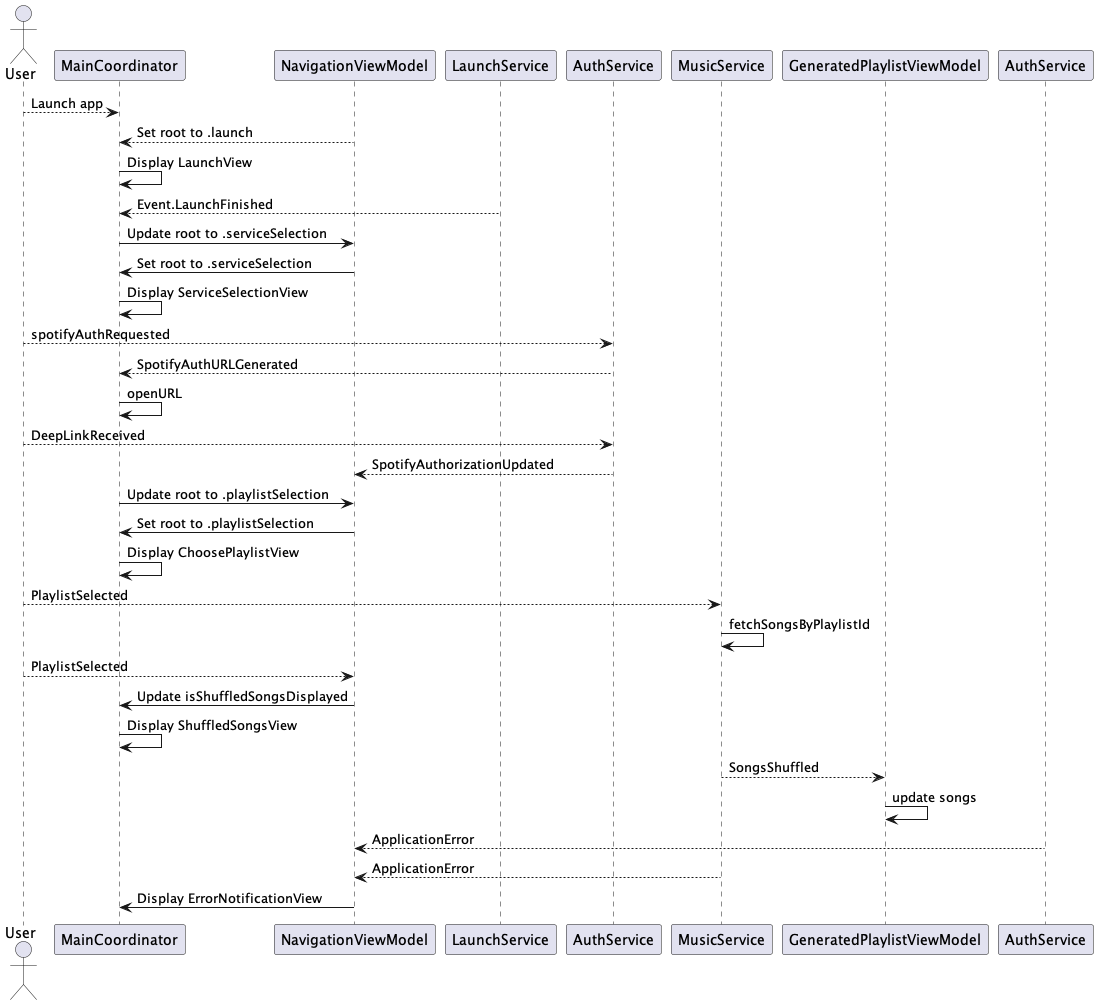

Screens communicate by sharing the same object that provides data, typically a repository class from the data layer or a service object. In this event-driven architecture, each ViewModel retrieves data from the EventBus in a reactive manner:

- ChoosePlaylistView notifies the EventBus that the user has selected a playlist by its ID.

- MusicService receives the event and fetches the playlist songs.

- NavigationViewModel receives the event and updates the state to display the GeneratedPlaylistView.

- GeneratedPlaylistView listens to the EventBus for the playlist data.

- MusicService sends the generated playlist to the EventBus.

- GeneratedPlaylistView receives the playlist and displays it.

The MusicService is responsible for providing data by ID. It uses objects implementing the repository pattern to cache data in memory or a local database.

This design ensures that views remain decoupled, making it easier to experiment with different navigation patterns and flows.
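The flow described above can be sketched end to end with a tiny synchronous bus. Everything here is a simplified stand-in: the `EventBus` API, the `PlaylistGenerated` event name, the in-memory `library` dictionary that plays the role of a repository, and the plain-class view models are all assumptions, not the app's actual code.

```swift
// Minimal synchronous bus (hypothetical API).
final class EventBus {
    private var handlers: [(Any) -> Void] = []
    func subscribe(_ handler: @escaping (Any) -> Void) { handlers.append(handler) }
    func send(_ event: Any) { handlers.forEach { $0(event) } }
}

struct PlaylistSelected { let id: String }          // command sent by the view
struct PlaylistGenerated { let songs: [String] }    // event name is an assumption

// Looks up songs by playlist ID and publishes the result.
final class MusicService {
    private let bus: EventBus
    private let library: [String: [String]]         // stands in for a repository

    init(bus: EventBus, library: [String: [String]]) {
        self.bus = bus
        self.library = library
        bus.subscribe { [weak self] event in
            guard let self = self, let command = event as? PlaylistSelected else { return }
            self.bus.send(PlaylistGenerated(songs: self.library[command.id] ?? []))
        }
    }
}

// Reacts to the same command by changing navigation state only.
final class NavigationViewModel {
    private(set) var isFocusPlaylistPresented = false

    init(bus: EventBus) {
        bus.subscribe { [weak self] event in
            if event is PlaylistSelected { self?.isFocusPlaylistPresented = true }
        }
    }
}

// Receives the playlist from the bus, never from the previous screen.
final class GeneratedPlaylistViewModel {
    private(set) var songs: [String] = []

    init(bus: EventBus) {
        bus.subscribe { [weak self] event in
            if let generated = event as? PlaylistGenerated { self?.songs = generated.songs }
        }
    }
}

let bus = EventBus()
let service = MusicService(bus: bus, library: ["focus": ["Song A", "Song B"]])
let navigation = NavigationViewModel(bus: bus)
let generatedPlaylist = GeneratedPlaylistViewModel(bus: bus)

// What ChoosePlaylistView would do on tap.
bus.send(PlaylistSelected(id: "focus"))
```

Note that the only value crossing the screen boundary is the playlist ID inside the command; neither view model holds a reference to the other.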

Displaying a modal view on top of any screen

.sheet(isPresented: $viewModel.isSpotifyDeviceActivationPresented) {
    DeviceActivationView()
        .environmentObject(compositor.deviceActivationViewModel)
        .presentationDetents([.medium])
        .presentationDragIndicator(.visible)
}

The DeviceActivationView is displayed as a sheet. The flag that controls its presentation is stored in the NavigationViewModel and passed to the .sheet modifier as a Binding. When the user closes the sheet, SwiftUI sets the flag to false and the sheet is dismissed.

The Coordinator, being a SwiftUI view, controls how a View is displayed. It can manage the presentation of views in various forms, such as:

- Sheet

- Alert

- Navigation stack

- Full-screen view

Displaying an error banner on top of any view

var body: some View {
    ZStack {
        // other views
        ErrorNotificationView()
            .environmentObject(compositor.errorViewModel)
    }
}

ErrorNotificationView is a view that hides/displays itself based on the state of the ErrorViewModel, which listens to the event bus for ApplicationError events. The ErrorNotificationView is a global view that is displayed on top of the navigation stack, so the user can see the error message no matter where they are in the app.

Any service in the app can send an ApplicationError event to the event bus, and the ErrorViewModel will display the error message to the user. ErrorNotificationView handles the logic of auto-hiding based on a timer.
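The auto-hide behavior could be sketched as follows. This version puts the timer logic in a view model with an injectable scheduler so it can be exercised without waiting; in the app the scheduler might wrap something like `DispatchQueue.main.asyncAfter`, and the logic may live in the view instead. `ApplicationError.message`, the `Scheduler` type, `ManualScheduler`, and the three-second delay are all assumptions.

```swift
struct ApplicationError { let message: String }     // payload is an assumption

final class ErrorViewModel {
    // Runs `work` after `delay` seconds; injected so tests can control time.
    typealias Scheduler = (_ delay: Double, _ work: @escaping () -> Void) -> Void

    private(set) var message: String?               // nil means the banner is hidden
    private let hideDelay: Double
    private let schedule: Scheduler
    private var generation = 0                      // stops an old timer hiding a newer error

    init(hideDelay: Double = 3, schedule: @escaping Scheduler) {
        self.hideDelay = hideDelay
        self.schedule = schedule
    }

    // Called when an ApplicationError arrives from the event bus.
    func receive(_ error: ApplicationError) {
        message = error.message
        generation += 1
        let scheduledGeneration = generation
        schedule(hideDelay) { [weak self] in
            guard let self = self, self.generation == scheduledGeneration else { return }
            self.message = nil
        }
    }
}

// Test double: collects scheduled work so it can be fired manually.
final class ManualScheduler {
    private(set) var pending: [() -> Void] = []

    func schedule(delay: Double, work: @escaping () -> Void) {
        pending.append(work)
    }

    func fireAll() {
        let work = pending
        pending = []
        work.forEach { $0() }
    }
}

let scheduler = ManualScheduler()
let errors = ErrorViewModel(schedule: scheduler.schedule)
errors.receive(ApplicationError(message: "Spotify is unreachable"))
```

The generation counter matters when two errors arrive close together: the first timer fires but no longer matches, so only the latest error's timer hides the banner.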

Visualizing the navigation events

The arrows represent the events that can change the state of the app. In code, an event triggered by the user is a Command and it is sent to the eventBus directly from the View that handles the action. Sending commands directly from the Views is a personal decision and another experiment in order to reduce the complexity of the app. Usually, the view will call a method from the ViewModel and the ViewModel will send the command to the eventBus. This is a more common approach, but I think if the view only sends commands, then I can have less boilerplate code in the ViewModel.

Example of sending commands from the view

// file:ConnectSpotifyButton.swift
Button(action: {
    eventBus.send(Command.SpotifyAuthorizationRequested())
}) {
    Text("Connect Spotify") // label is illustrative; the original snippet omits it
}

// file:PlaylistsGrid.swift
.onTapGesture {
    eventBus.send(Command.PlaylistSelected(playlist.id))
}

Any view has access to the event bus and can send commands to it. Commands are handled by:

- Coordinator - to change the navigation state

- Services - to update the state of the app

- ViewModels - to update the state of the view if necessary

Multi-platform support

Different platforms might require different navigation flows. Complications arise when the navigation differs but some pieces stay the same. The first solution that comes to mind is to reuse as much as possible. But because there are only a few platforms to support, and new platforms don’t appear overnight, I think the best approach here is to have a separate Coordinator and NavigationViewModel for each platform.

Martin Fowler’s Refactoring popularized the rule of three: tolerate duplication the first two times and refactor on the third. If I have to support a third platform, I will think about a more generic solution. Currently, in the Apple world, I have to support iOS and macOS.

Conclusion

I have been experimenting with SwiftUI for a while now, and I have found that it is a good framework for building navigation systems. SwiftUI is a declarative framework that allows me to define my UI in a simple and concise way. By using an event-driven architecture, I can create a navigation system that is easy to understand and maintain. I hope to continue the experiment and see how far I can push SwiftUI to build more complex navigation systems.

A big advantage of EDA is that the code becomes truly asynchronous: events can be generated anytime and anywhere. Adding a new view becomes simpler because the views are decoupled; they don’t have to know about each other, only about the events. Because the views are decoupled, they can be combined in different ways to create different navigation flows, and one flow is easy to swap for another. For example, different platforms can have different navigation flows.

I don’t think the solution above follows EDA best practices as described by the book. I haven’t finished it yet, so I am still learning and experimenting with the concepts, applying them to the iOS app to see how they work in practice. I hope to learn more about EDA and apply it to other projects in the future.